On July 30, 2022, Petr Špaček spoke at the DNS-OARC 38 conference about the performance effects of DNSSEC validation in BIND 9. This article recaps the highlights of his presentation for those who may be interested. The slides and a recording of the full presentation are available on our Presentations page.

Traditional wisdom tells us that a resolver performing DNSSEC validation uses more computing resources than one that does not validate. But is it true?

First, we have to decide on what resources we’re talking about. Then, how do we know how much we need? Let’s be scientific and run some specific tests, looking at latency, bandwidth, CPU consumption, the number of operating system sockets, and memory.

Everything starts with the dataset: realistic data is crucial. Fortunately, a real European telco gave us actual, anonymized data with a mix of landline and mobile clients.

In ISC’s tests, we looked at opposite ends of the data spectrum: a lightly loaded server handling 9,000 queries per second (QPS), and a heavily loaded resolver seeing 135,000 queries per second.

A note about QPS: not all queries are equal. Different queries require different amounts of resolver resources, depending on whether there’s a cache hit or a cache miss, the answer size, and so on.

We took our huge dataset and downsampled it into smaller sets. We split the data by source IP address, keeping each client’s queries together: if a client IP address was selected for a smaller set, all of that client’s queries were included, and if it was not selected, none of them were. This was important because we needed to keep the cache hit/miss rate exactly as it was in the live dataset.
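The per-client downsampling described above can be sketched as follows. This is a minimal illustration, not the tooling used for the tests: the `(client_ip, query)` tuple format, the function name, and the random selection of clients are all assumptions made for the example.

```python
import random


def downsample_by_client(queries, fraction, seed=0):
    """Keep every query from a random subset of client IPs.

    queries:  list of (client_ip, query) tuples (hypothetical format).
    fraction: share of distinct client IPs to keep, between 0 and 1.
    """
    queries = list(queries)
    clients = sorted({client_ip for client_ip, _ in queries})

    # Select whole clients, never individual queries, so each selected
    # client's traffic pattern stays intact.
    rng = random.Random(seed)
    keep_count = max(1, round(len(clients) * fraction))
    kept = set(rng.sample(clients, keep_count))

    # Preserve the original query order for the selected clients.
    return [(ip, q) for ip, q in queries if ip in kept]
```

Selecting whole clients rather than individual queries is what keeps repeated lookups from the same client together, which is the property the cache hit/miss rate depends on.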

Our test resolver was running BIND 9.18.4 (the latest stable version at the time), and we started each test run with an empty cache. We tested two configurations: one with validation enabled, one without; everything else remained the same between the two sets of tests. The queries were simulated using the DNS Shotgun software tool, which replays a packet capture (pcap), then records the exact timing of queries and replies to the resolver.

Then we come to the harder part of our setup: the Internet. A resolver needs authoritative servers to talk to, and their timing, latency, reliability, and so on are hard to measure and simulate, so we ran our test resolver against the live Internet. Of course, that causes lots of noise. To counter that, we repeated each measurement 10 times and then performed some post-processing.
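The article does not say exactly what the post-processing involved. One common way to tame noise across repeated runs, shown here purely as an assumed example, is to summarize each time step by the minimum, median, and maximum across all runs.

```python
from statistics import median


def summarize_runs(runs):
    """Summarize repeated measurements per time step.

    runs: list of equal-length lists; runs[i][t] is the value that
          run i recorded at time step t (hypothetical layout).
    Returns one {"min", "median", "max"} dict per time step.
    """
    summary = []
    for values in zip(*runs):  # all runs' values for one time step
        summary.append({
            "min": min(values),
            "median": median(values),
            "max": max(values),
        })
    return summary
```

Plotting the median as a line with the min/max range shaded around it makes it easy to see whether two configurations differ by more than the run-to-run noise.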

We were concerned with resources consumed on the resolver side, so we needed tools to monitor resource consumption. We wrote scripts that scraped files in /proc and /sys and stored them on disk with timestamps; when everything was complete, we post-processed the raw test files to generate charts.
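As a rough idea of what such a scraper can look like, the sketch below parses the Linux `/proc/net/sockstat` file, which reports system-wide socket counters including TCP sockets in use (`inuse`) and in the time-wait state (`tw`). The choice of this particular file and the function names are assumptions for illustration; the scripts used in the tests may have read different files.

```python
import time


def parse_sockstat(text):
    """Parse /proc/net/sockstat-style text into {protocol: {field: int}}."""
    stats = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        proto, rest = line.split(":", 1)
        fields = rest.split()
        # Fields come in name/value pairs, e.g. "inuse 7 orphan 0 tw 12".
        stats[proto.strip()] = {
            name: int(value)
            for name, value in zip(fields[::2], fields[1::2])
        }
    return stats


def sample_sockstat(path="/proc/net/sockstat"):
    """Read the counters once and attach a timestamp (Linux only)."""
    with open(path) as f:
        return {"timestamp": time.time(), "stats": parse_sockstat(f.read())}
```

Calling `sample_sockstat()` once per second and appending each result to a file yields the kind of timestamped raw data that can be turned into charts afterwards.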

Note that the 9,000 QPS is not a steady query rate such as you would get from DNSperf or a similar tool. The packet rate from the pcap we used as input for DNS Shotgun jumps around, like a live network.

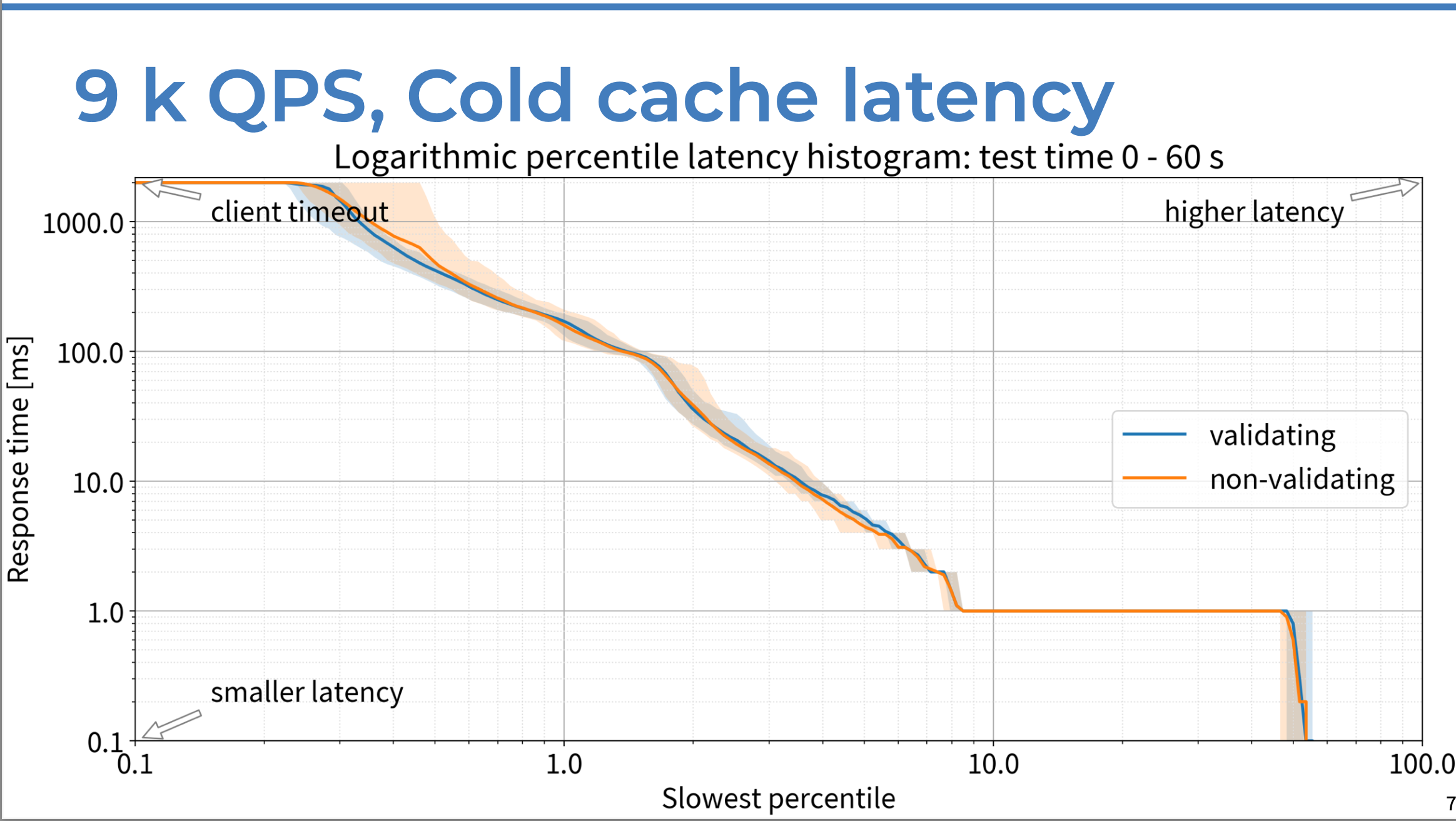

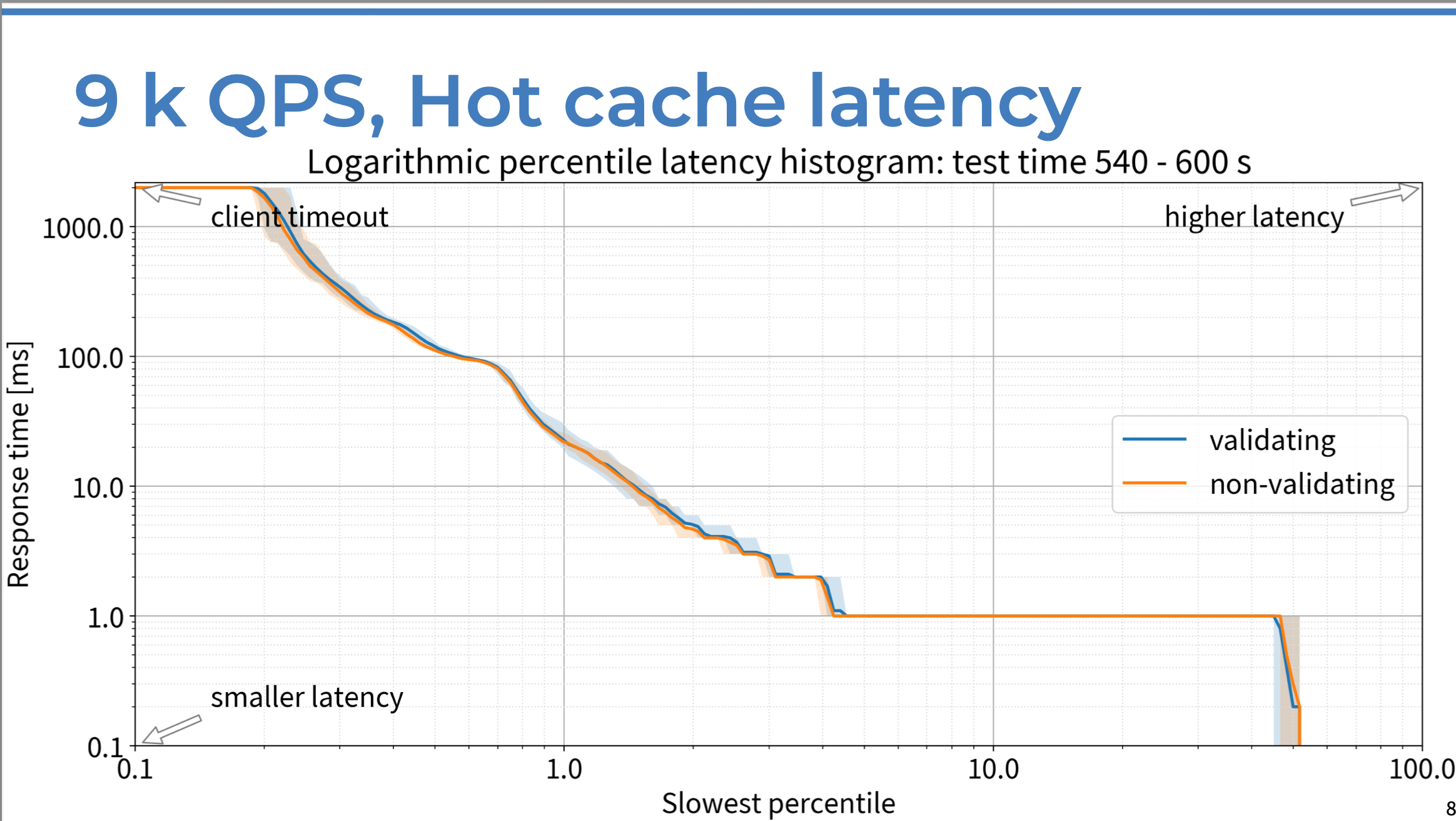

Since we’re talking about DNS, we’re obliged to be obsessed with latency. Traditional DNSSEC validation wisdom tells us that there should be a significant increase in latency because validation is complex, but our experiment doesn’t agree: the latency we saw with both validating and non-validating resolvers was practically the same. In both cases, even in the first minute with a completely empty cache, 90% of the queries were answered within 1 ms; only 10% took 1 ms or more to receive a response.

In the last minute of the 10-minute test, the chart lines are even closer – nothing to see. For 9,000 QPS, DNSSEC validation causes no difference in latency.
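A figure such as "90% of queries answered within 1 ms" is simply the fraction of response times that fall below a threshold. The sketch below assumes a plain list of per-query latencies in milliseconds, with `None` standing for a query that never received a response; that input format is made up for the example and is not the DNS Shotgun output format.

```python
def fraction_answered_within(latencies_ms, threshold_ms):
    """Return the fraction of queries answered in under threshold_ms.

    latencies_ms: list of response times in milliseconds; None marks
                  a query that got no response (hypothetical format).
    """
    if not latencies_ms:
        raise ValueError("no queries recorded")
    fast = sum(
        1 for latency in latencies_ms
        if latency is not None and latency < threshold_ms
    )
    return fast / len(latencies_ms)
```

Evaluating the same function with a threshold equal to a client timeout gives the share of queries that would have been answered in time.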

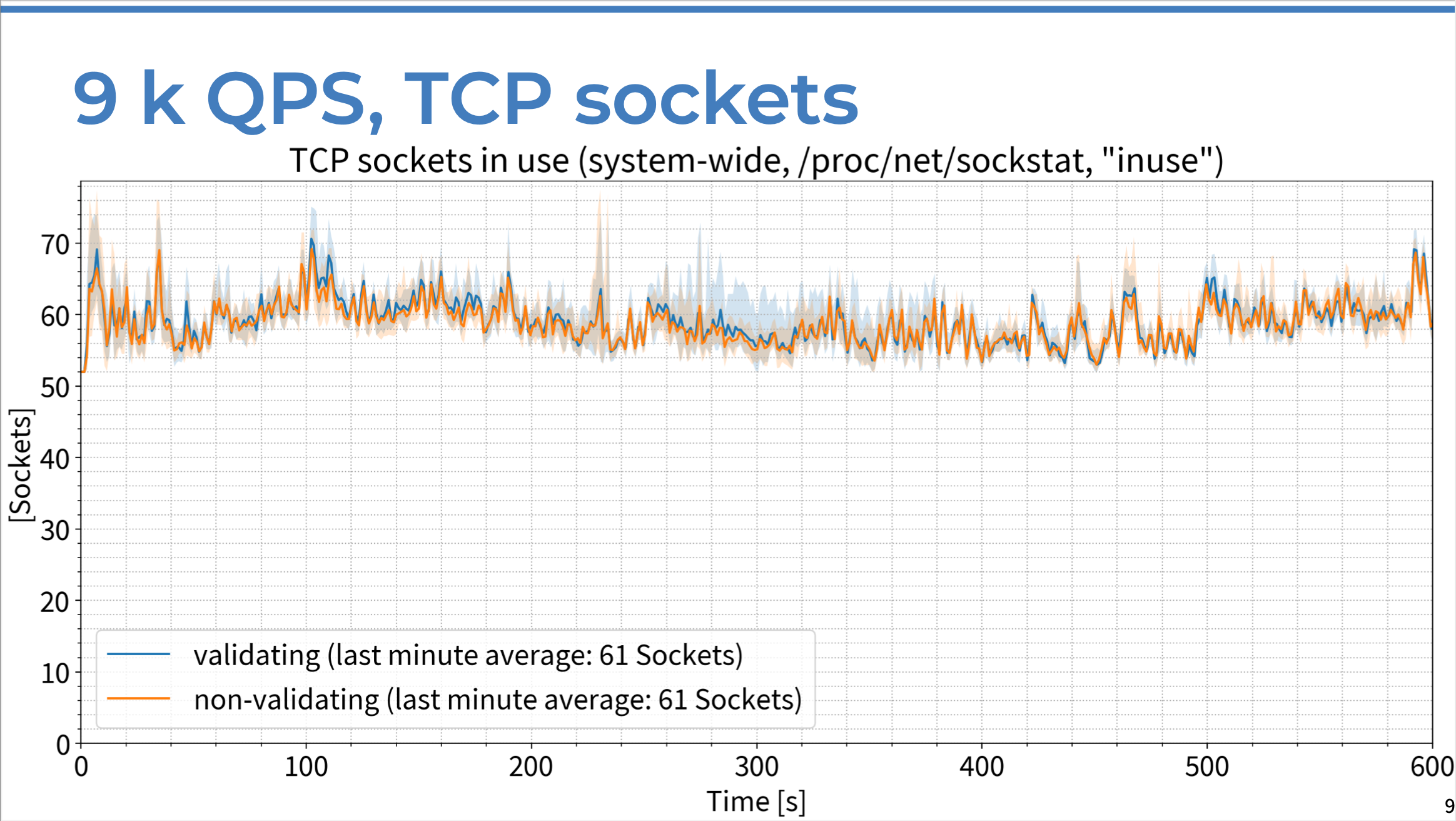

Traditional wisdom says we’ll need more TCP sockets, because DNSSEC packets are larger. To measure that, we focused on the number of TCP sockets in use, as reported by the operating system. But once again, we see practically no difference between the validating and non-validating use cases. If we focus on just the last minute and average the number of sockets in use, the numbers for validating and non-validating are exactly the same.

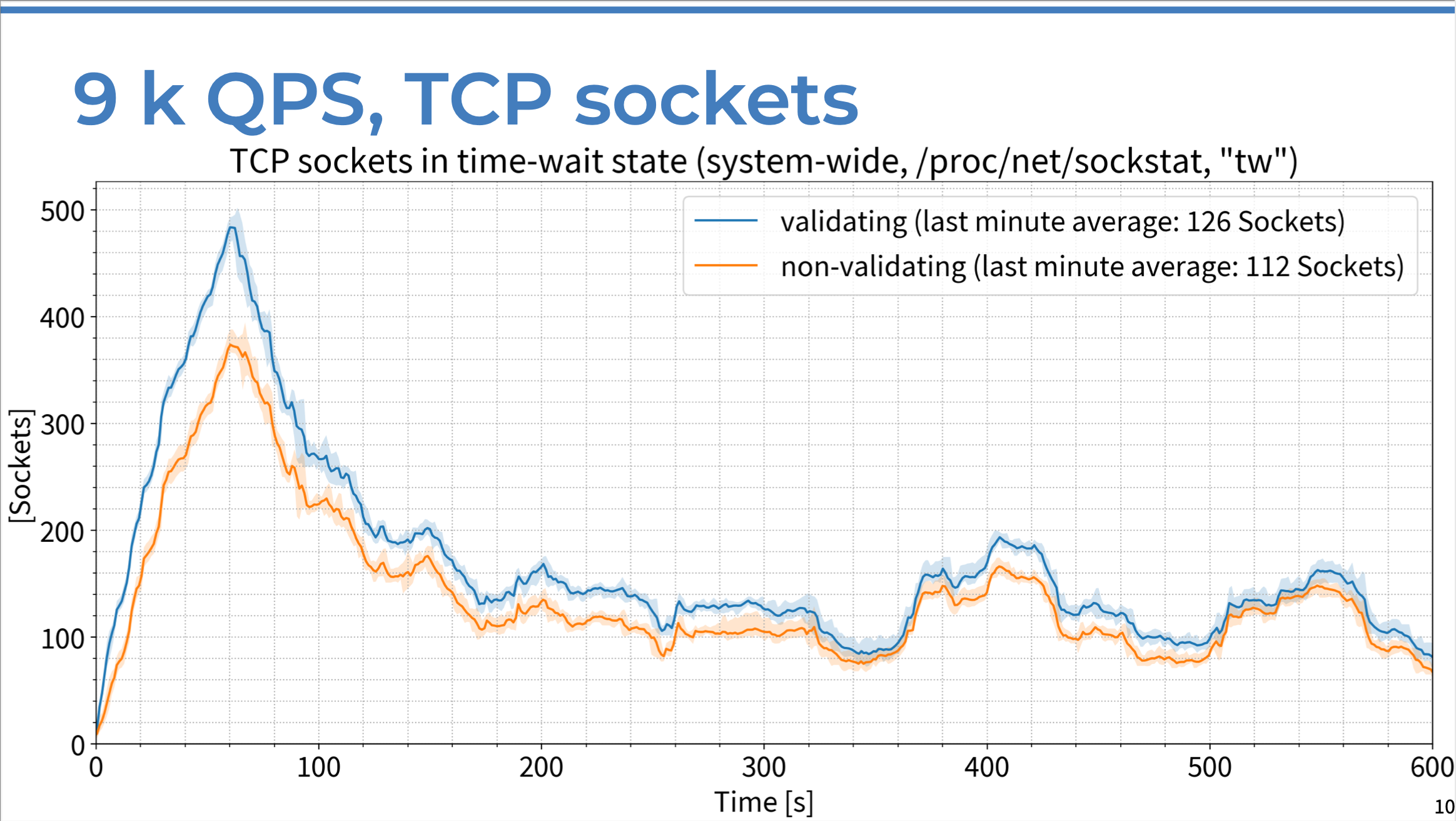

The number of sockets actually in use at any given moment might not reflect the total number of sockets used over the test period, since some sockets might be so short-lived that they wouldn’t be recorded. To account for this, we measured the number of TCP sockets in a “time-wait” state; that is, sockets that were closed by the resolver once a DNS transaction was finished and remained in the “time-wait” state for a short period of time after closing. This was also measured at the OS level; there is some difference, but only about 14 sockets on average on a resolver that’s handling 9,000 QPS, which is insignificant.

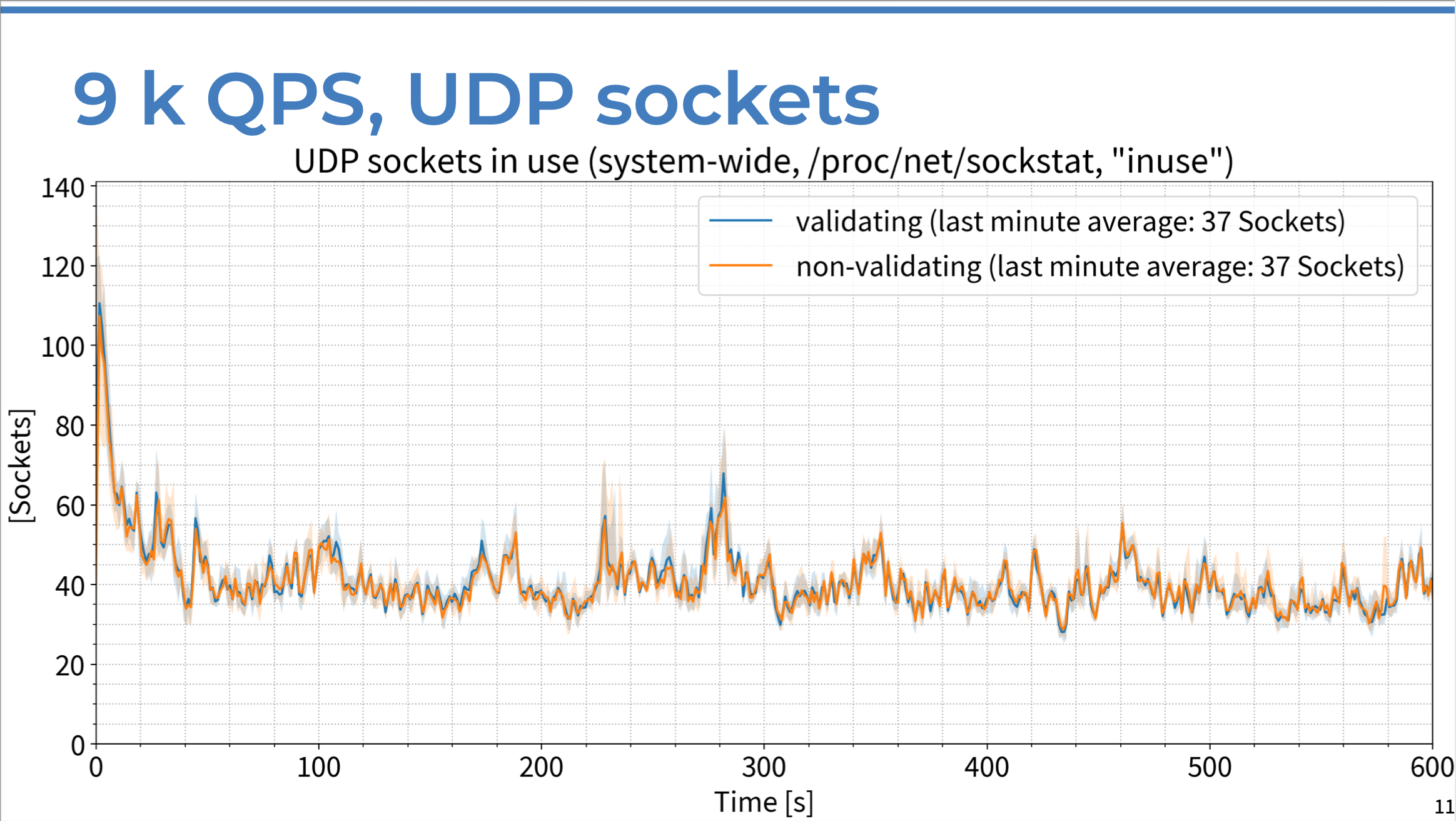

For UDP sockets, the story is the same: exactly the same number of sockets in use in the last minute of the test, on average.

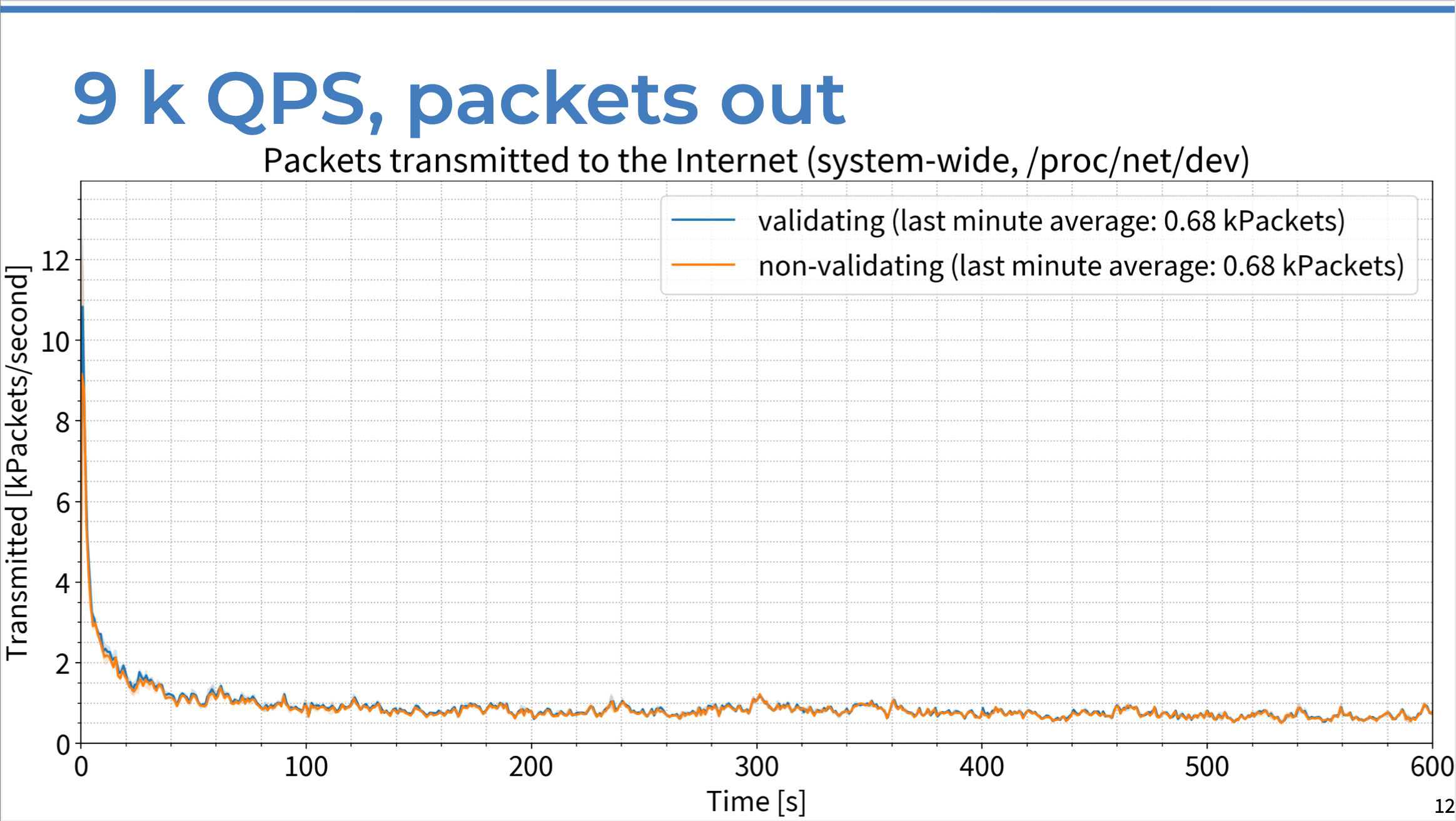

Traditional wisdom here tells us that DNSSEC validation will cause more packets to be sent; but again, this was not the case in our experiment. There was an extremely small increase in the first couple of seconds of the test, but after that we saw no difference.

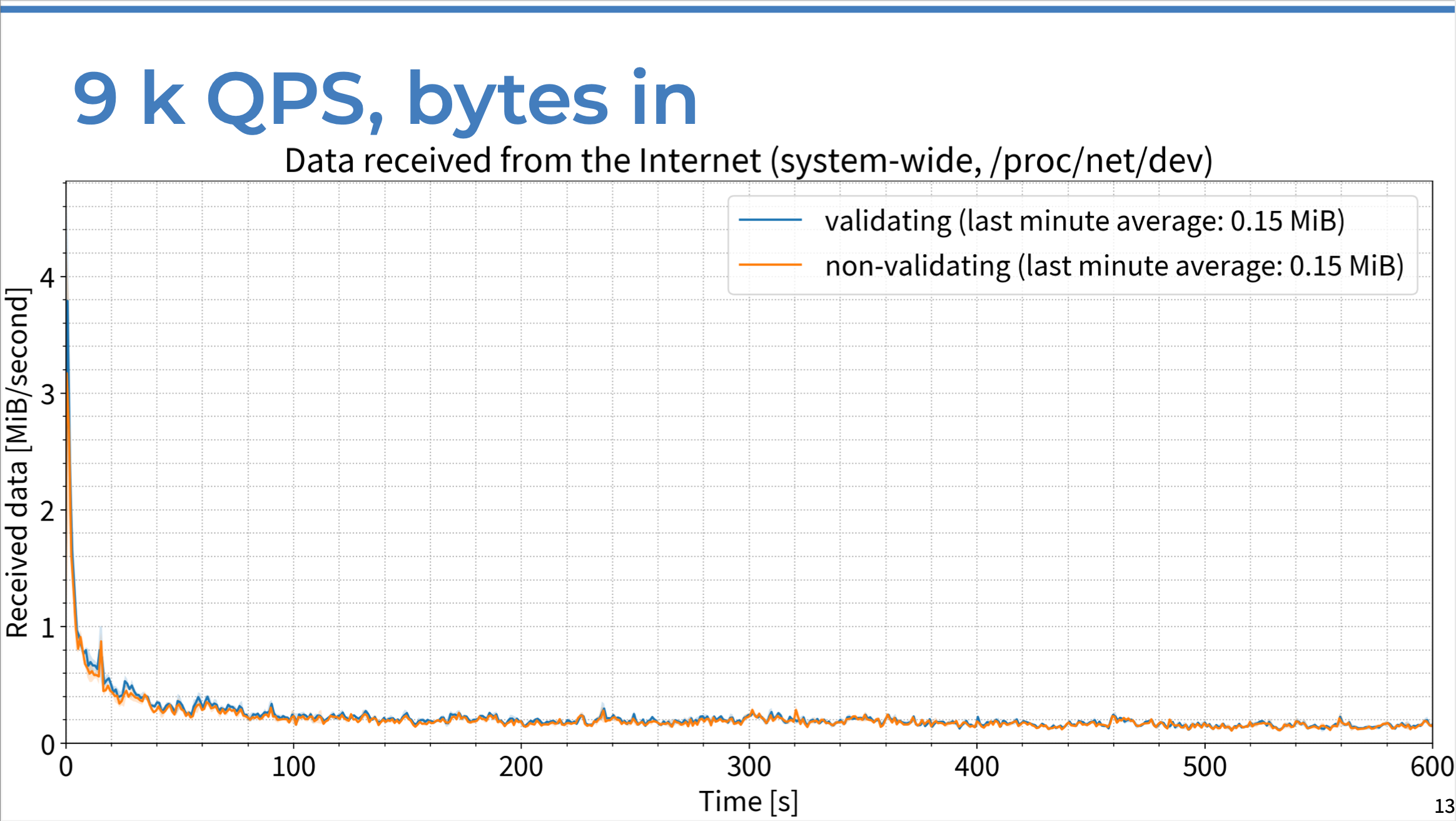

So bandwidth used must increase, right? Again, not really. We saw a tiny spike in the first second, but once again, by the last minute, there is no practical difference between validating and non-validating.

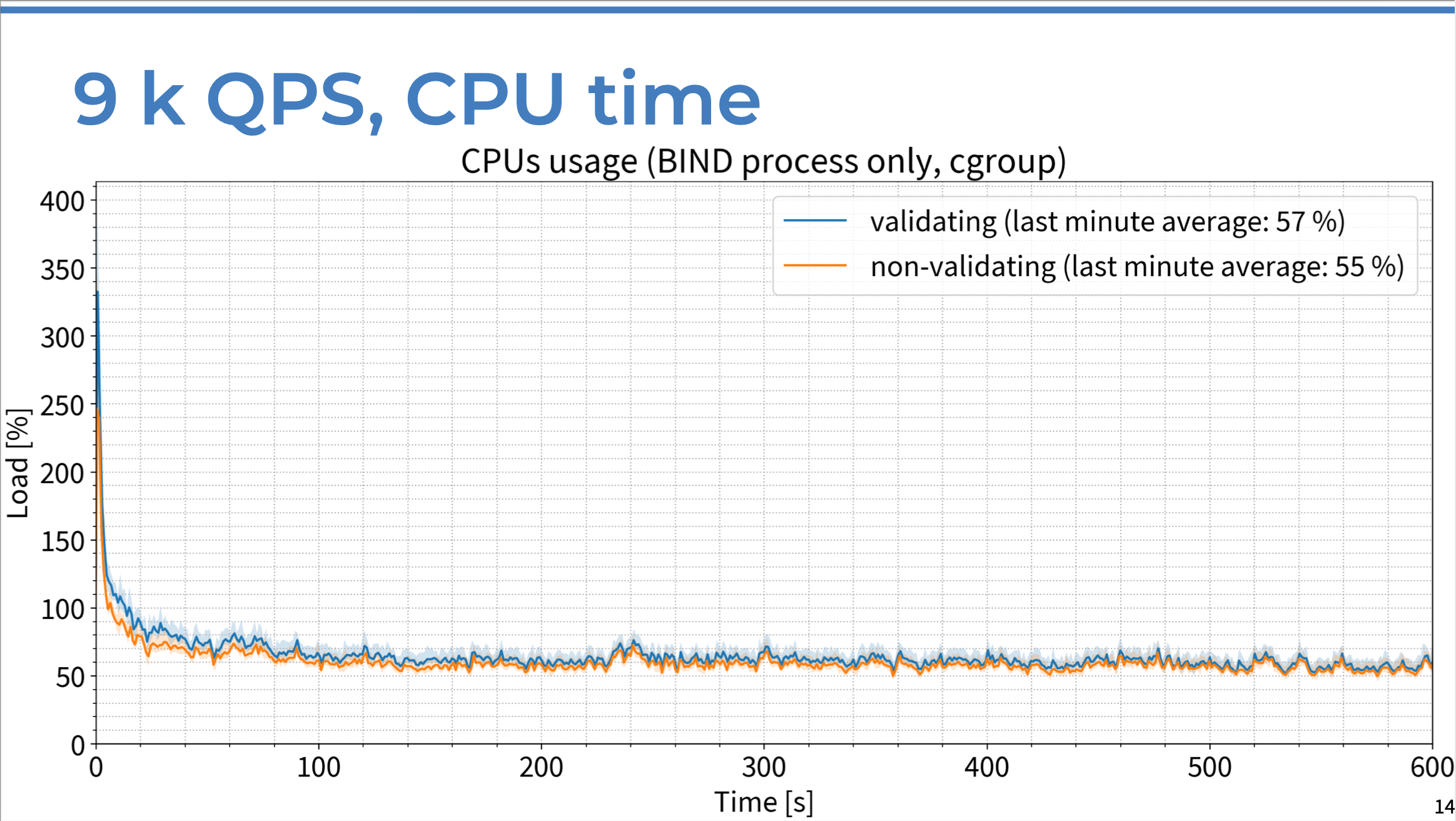

If we listen to the conventional wisdom, then DNSSEC validation must be CPU-intensive. But yet again, that is not the case. We may have seen a slight difference in the first minute or two, but the data quickly converged with the values for the non-validating configuration, and by the last minute there is a difference of only 2% on average. In this case, 100% means one CPU core, so 2% is practically nothing.
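To make the "100% means one CPU core" convention concrete: the percentage is the CPU time a process consumed during an interval divided by the wall-clock length of that interval. The function below is a sketch of that arithmetic only; its name and inputs are chosen for illustration.

```python
def cpu_percent_of_one_core(cpu_seconds_start, cpu_seconds_end, wall_seconds):
    """CPU usage over an interval, where 100.0 means one fully busy core.

    cpu_seconds_start / cpu_seconds_end: cumulative CPU time (user plus
        system) of the process at the start and end of the interval.
    wall_seconds: elapsed wall-clock time of the interval.
    """
    if wall_seconds <= 0:
        raise ValueError("interval must have a positive duration")
    return 100.0 * (cpu_seconds_end - cpu_seconds_start) / wall_seconds
```

For instance, a process that accumulates 1.2 seconds of CPU time during a 60-second window used 2% of one core. On a multi-threaded resolver the value can exceed 100.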

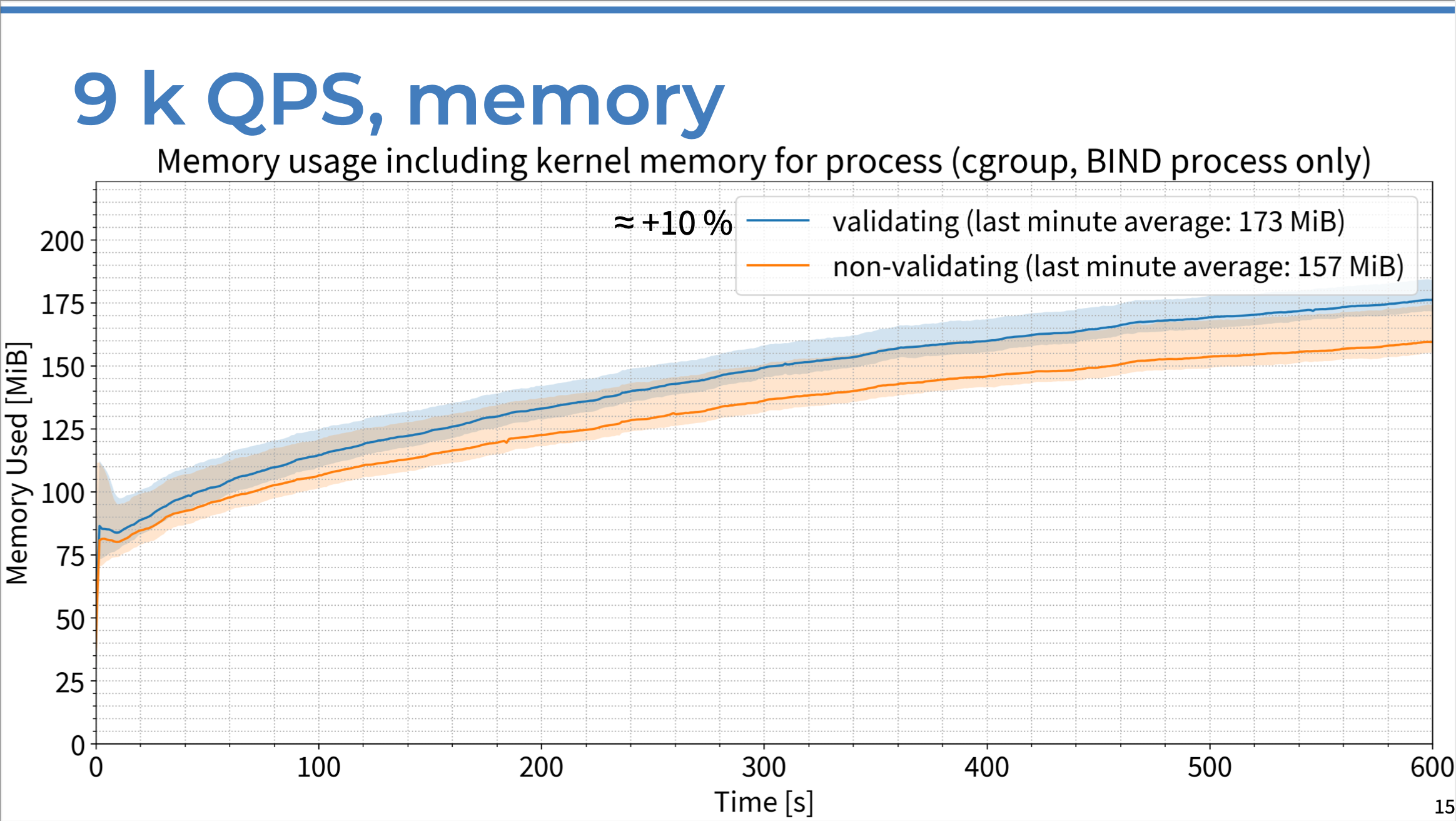

Last but not least: memory use – finally, somewhere where validating makes a visible and reproducible difference, outside the margin of error! During the last minute of our tests, the validating resolver used approximately 10% more memory on average than the non-validating one.
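The "last minute average" comparison used throughout these results can be expressed in a few lines. The sketch below assumes each measurement series is a list of `(seconds_since_start, value)` samples; the layout and function names are illustrative assumptions.

```python
def mean_in_window(samples, start, end):
    """Average the values of samples whose time t satisfies start <= t < end.

    samples: list of (seconds_since_start, value) tuples (hypothetical format).
    """
    values = [value for t, value in samples if start <= t < end]
    if not values:
        raise ValueError("no samples in the requested window")
    return sum(values) / len(values)


def percent_increase(baseline, candidate):
    """Relative increase of candidate over baseline, in percent."""
    return 100.0 * (candidate - baseline) / baseline
```

For a 10-minute test, the last minute is the window from 540 to 600 seconds. With made-up averages of 1,000 MB for the non-validating resolver and 1,100 MB for the validating one, `percent_increase(1000.0, 1100.0)` gives 10.0.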

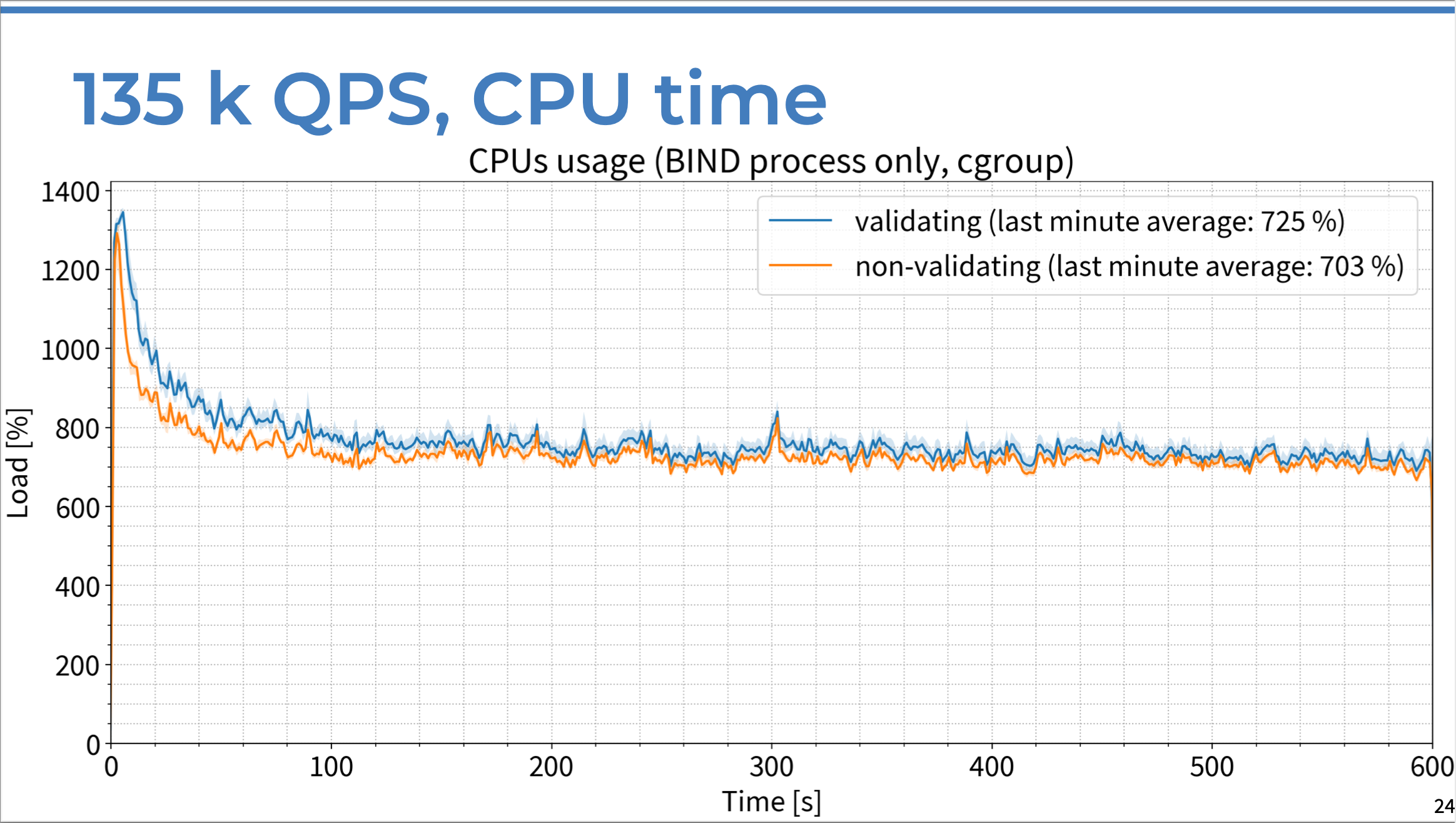

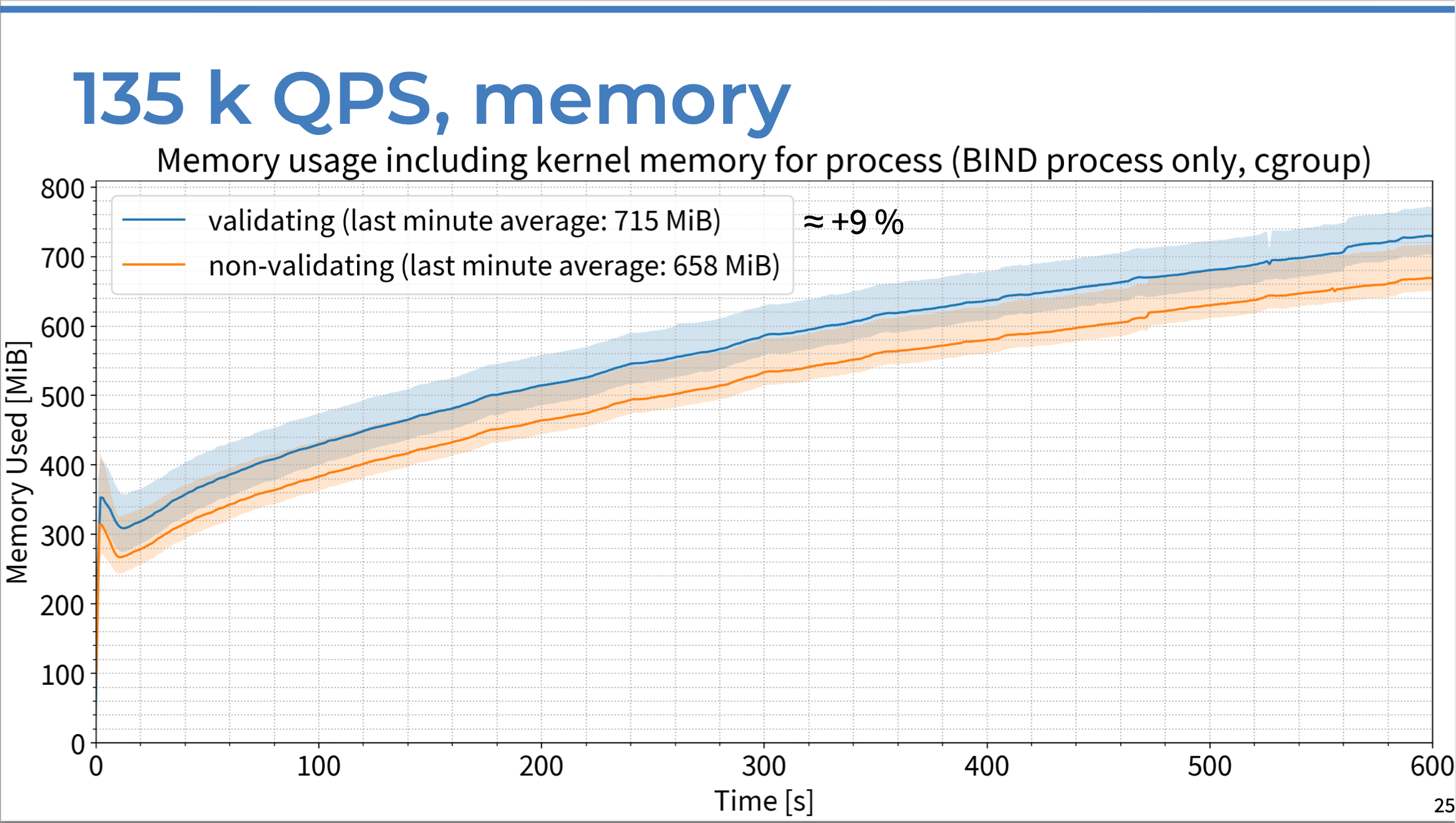

In this set of tests, we ran the exact same set of experiments as before, but this time the resolver was heavily loaded.

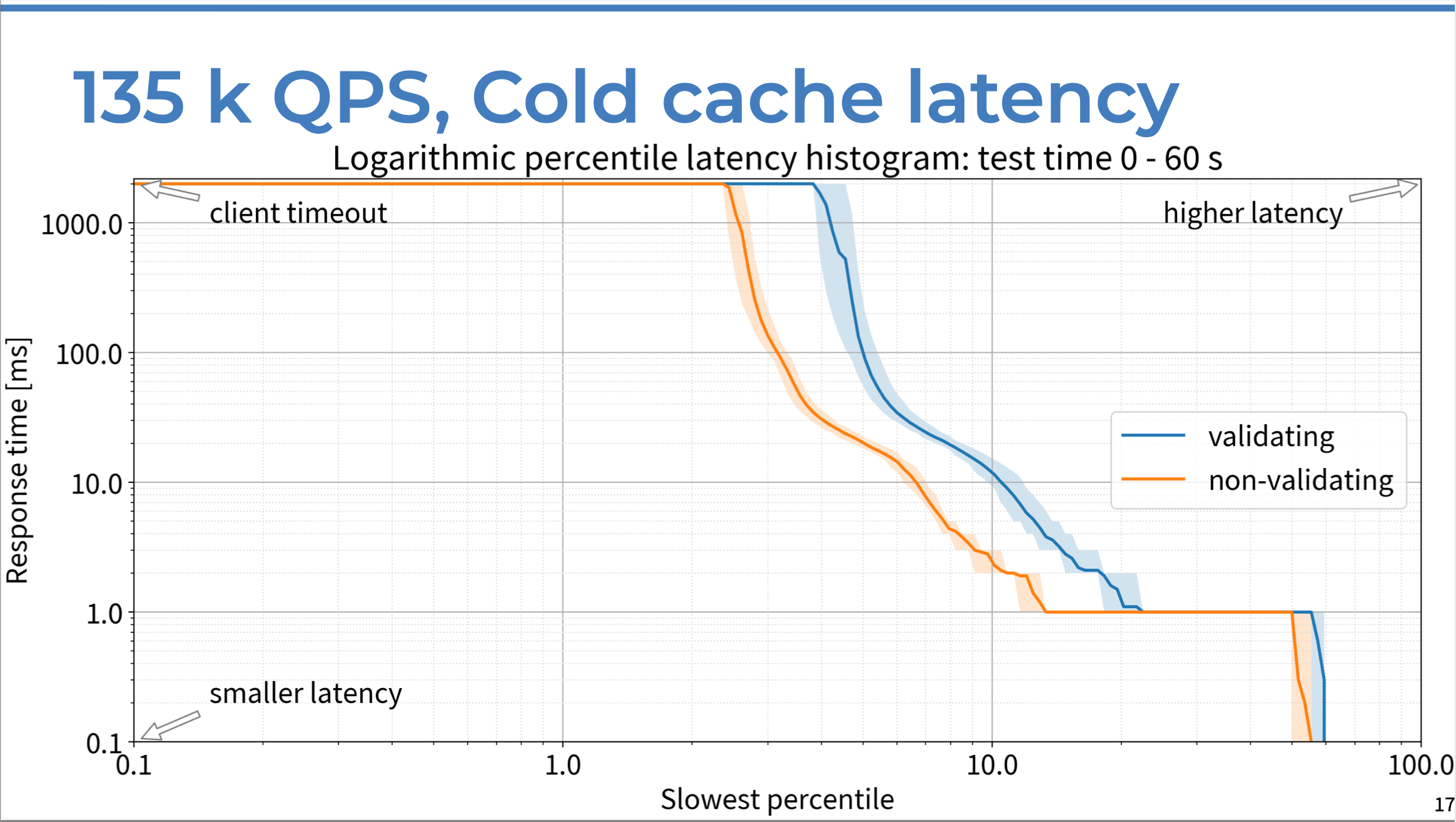

With a completely empty cache, validation does make a difference in the first minute; the percentage of responses that did not arrive within the client timeout (the typical Windows client timeout is 2 seconds) increased. But we notice that in the very first minute, both validating and non-validating resolvers can’t keep up: they’re not warmed up and the cache is empty. More than 2% of queries were not answered in time for both validating and non-validating resolvers, so the issue is not with validation.

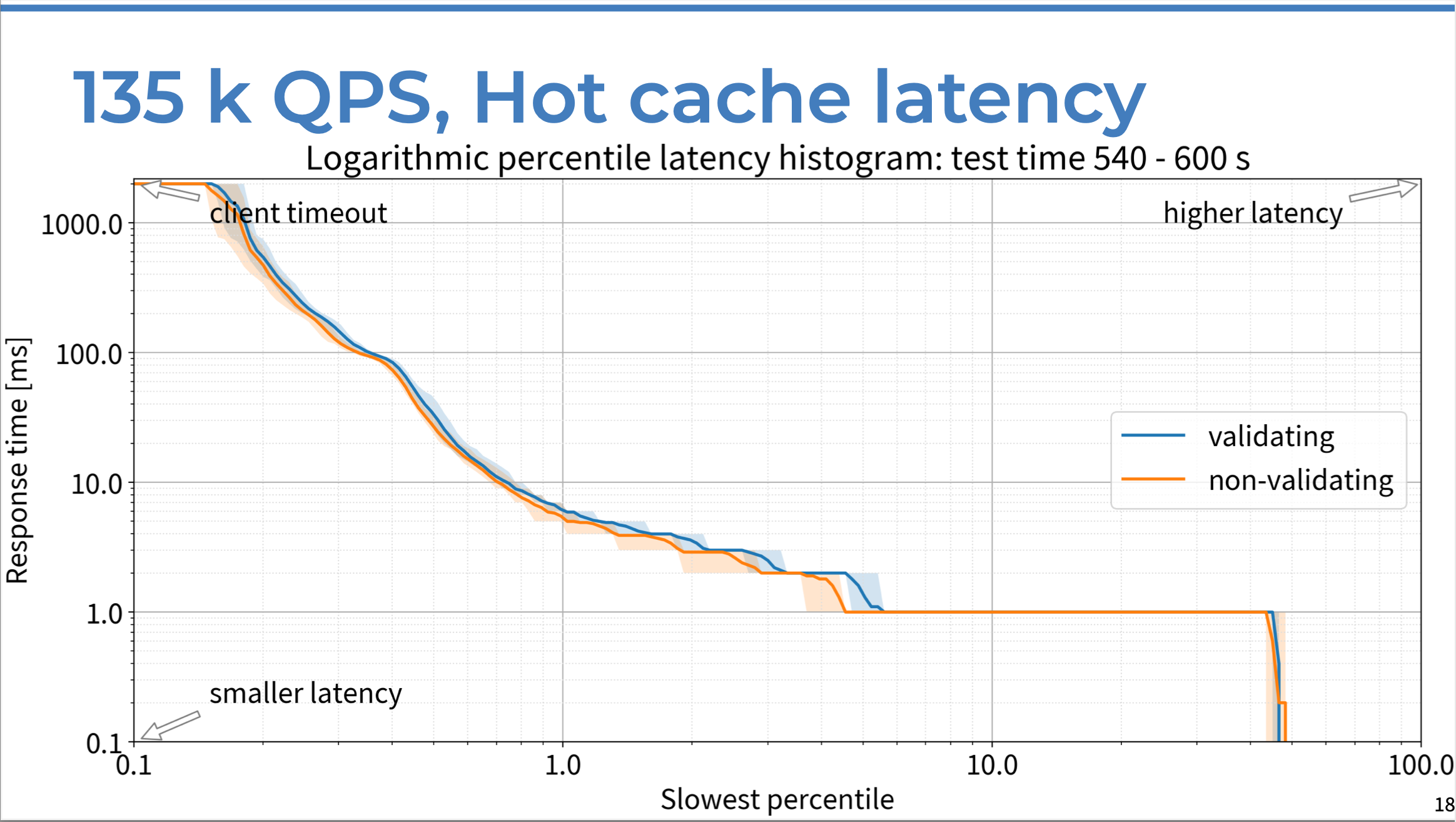

But in the second minute, it’s just slightly worse for validating, and as time goes on their performance converges. By the tenth minute, it’s hard to say if there’s a meaningful difference or not.

We can conclude that if there is any difference at all, it’s a penalty of approximately 1 ms for approximately 1-2% of queries. That’s the only impact of DNSSEC validation on latency for a busy resolver handling 135,000 QPS.

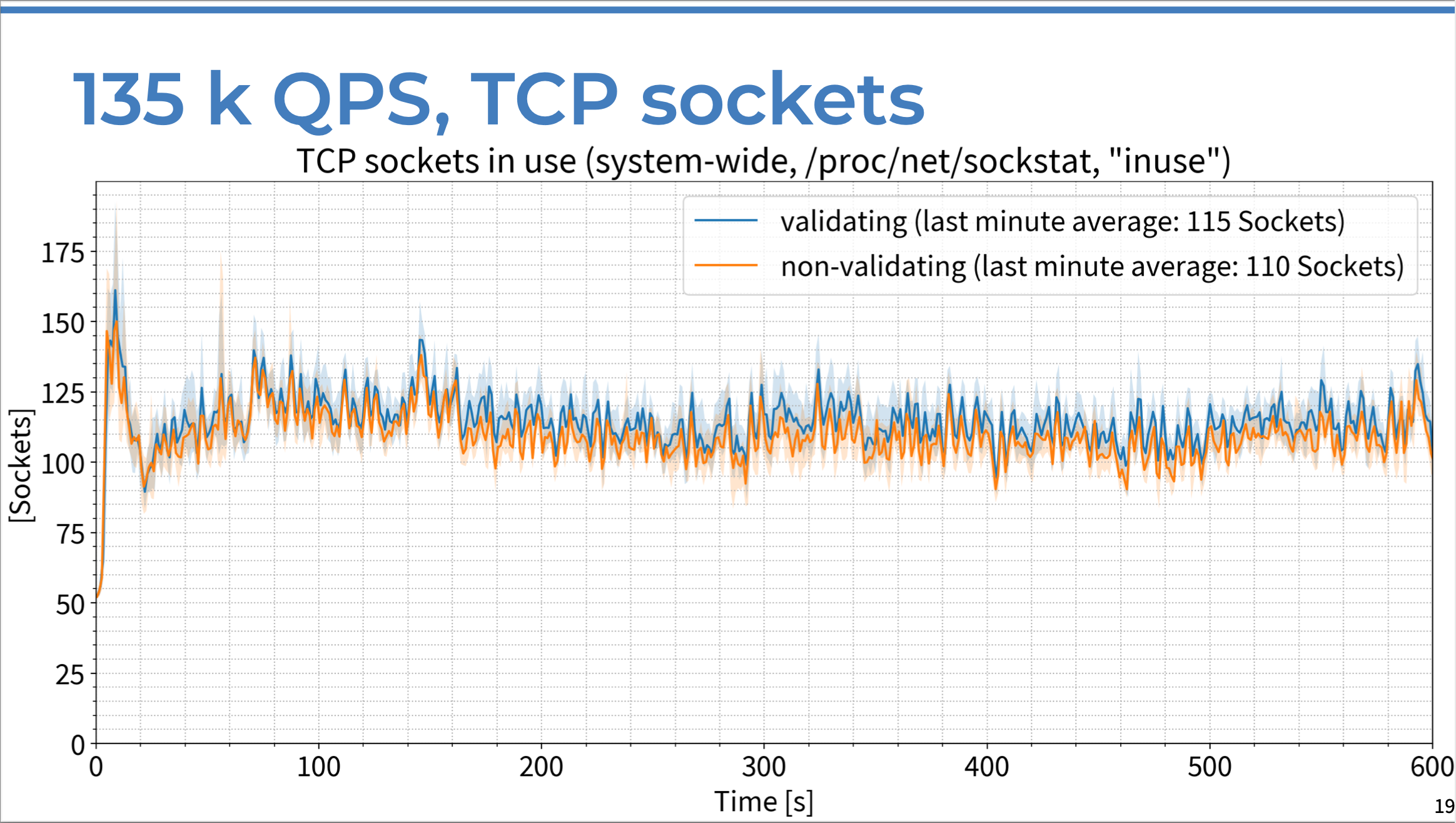

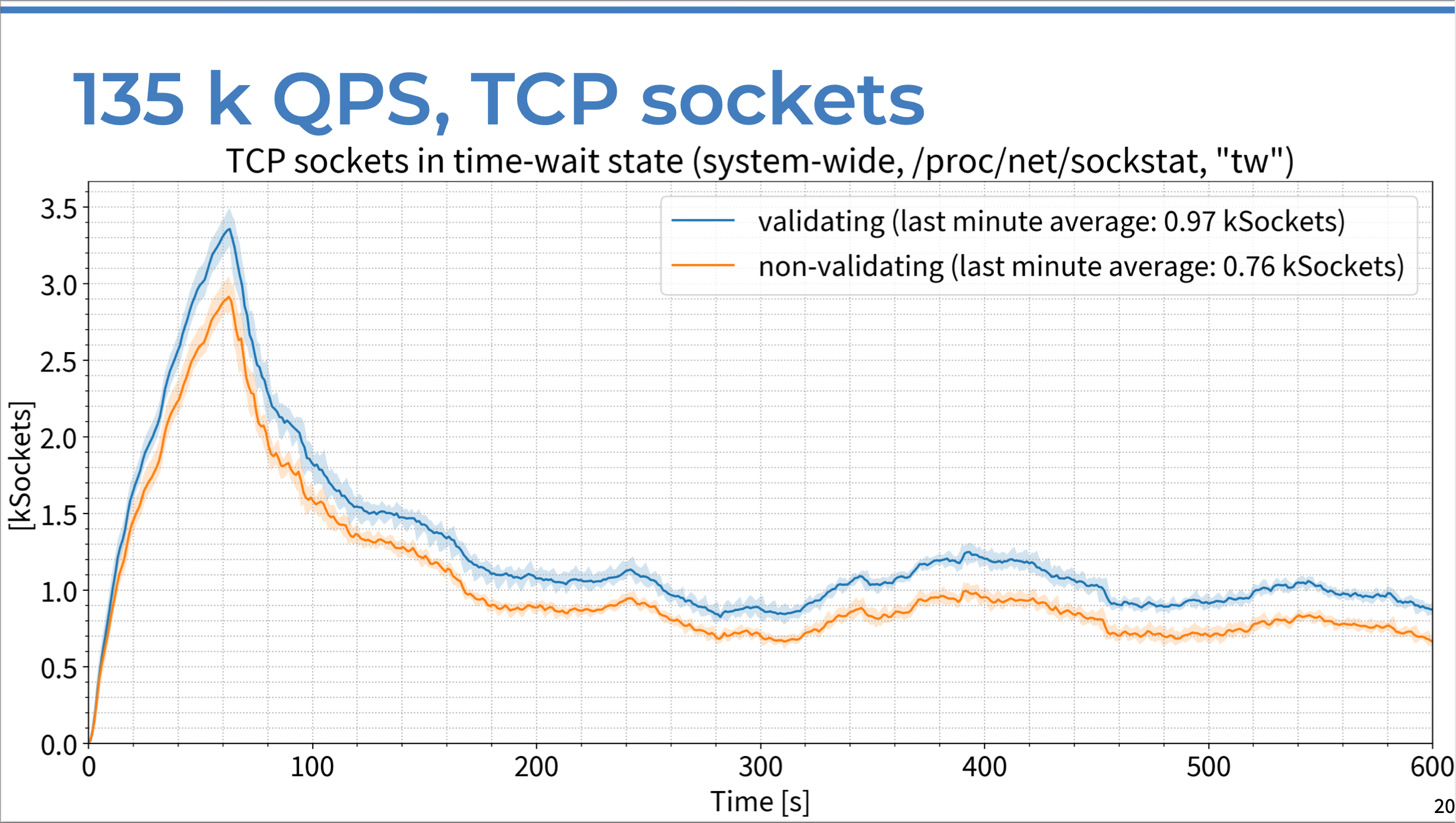

Looking again at TCP sockets, is there any difference on the resolver with lots of queries and validation enabled? There is, but it’s very small: only five more sockets in use, on average, on a resolver handling 135,000 QPS.

If we look at TCP sockets in the time-wait state, there are about 200 more of them on the validating resolver. Again, this is not a significant number, and it does not require any OS configuration changes to allow more file descriptors to be open at the same time.

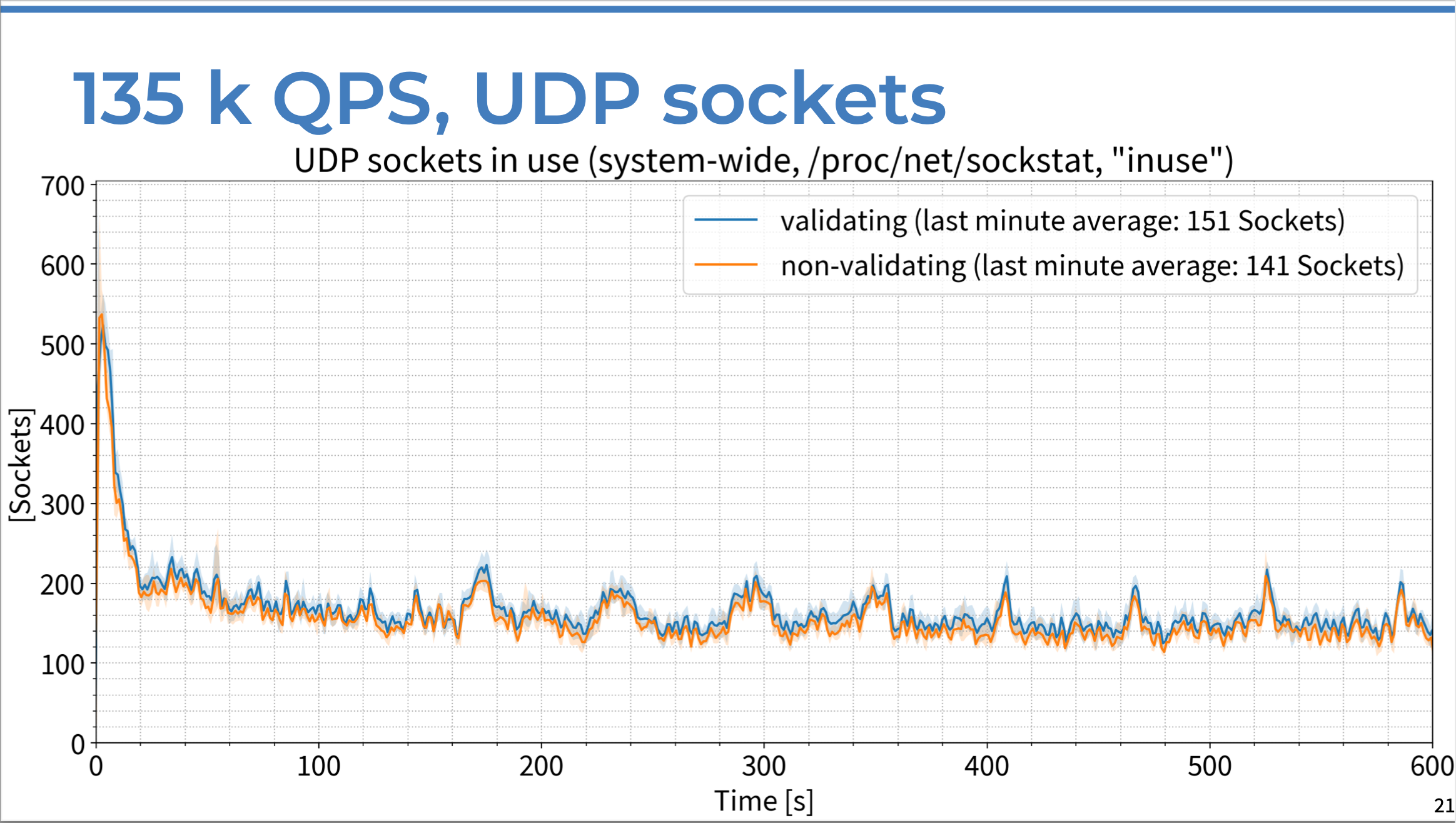

As for UDP sockets, we again see only a very small difference between the validating and non-validating resolvers.

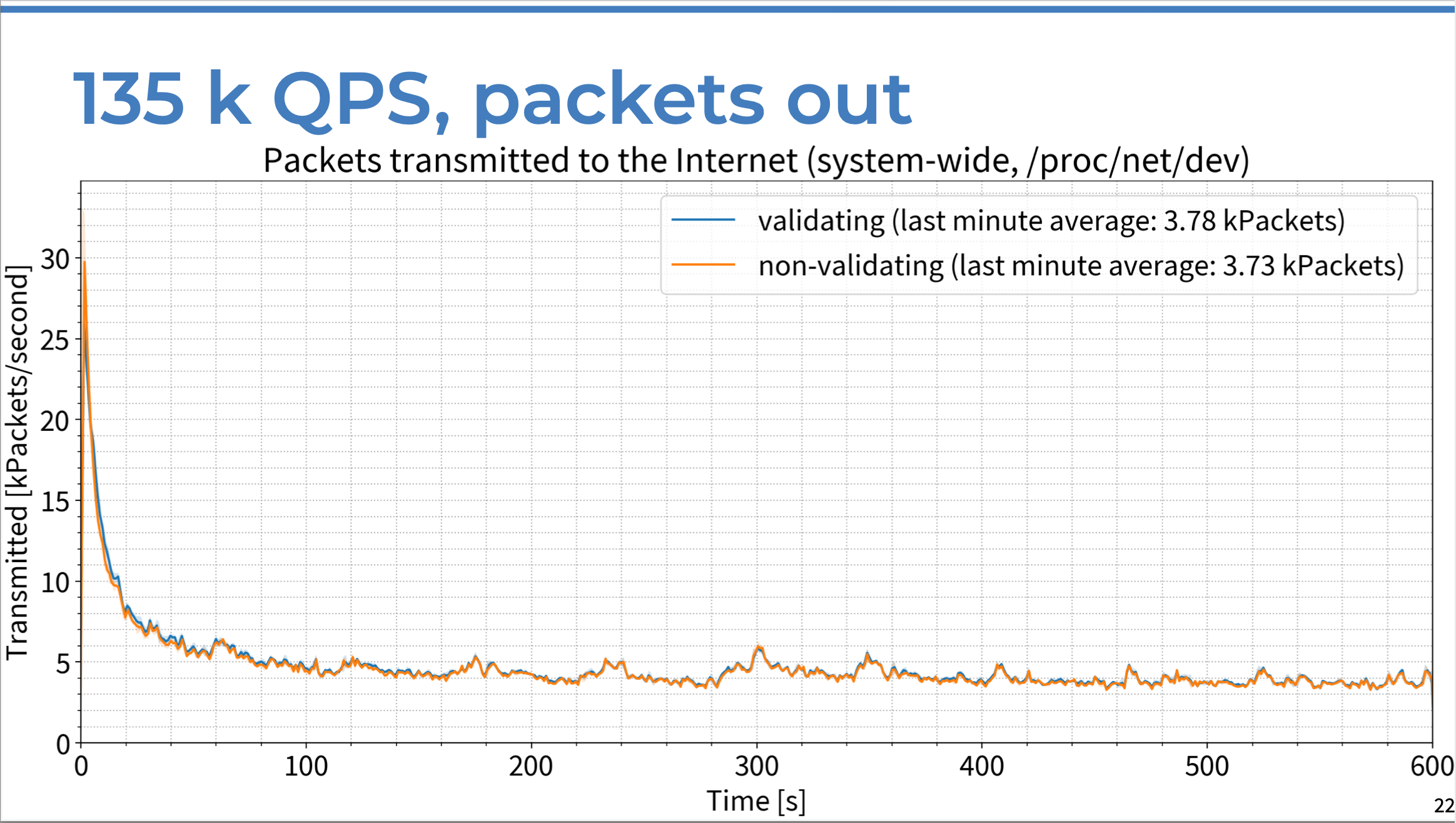

When we look at the number of packets being sent out with 135,000 QPS, we do see a small difference. However, it’s approximately 50 packets per second more being sent out from the validating server than from the non-validating server, which is not even noticeable.

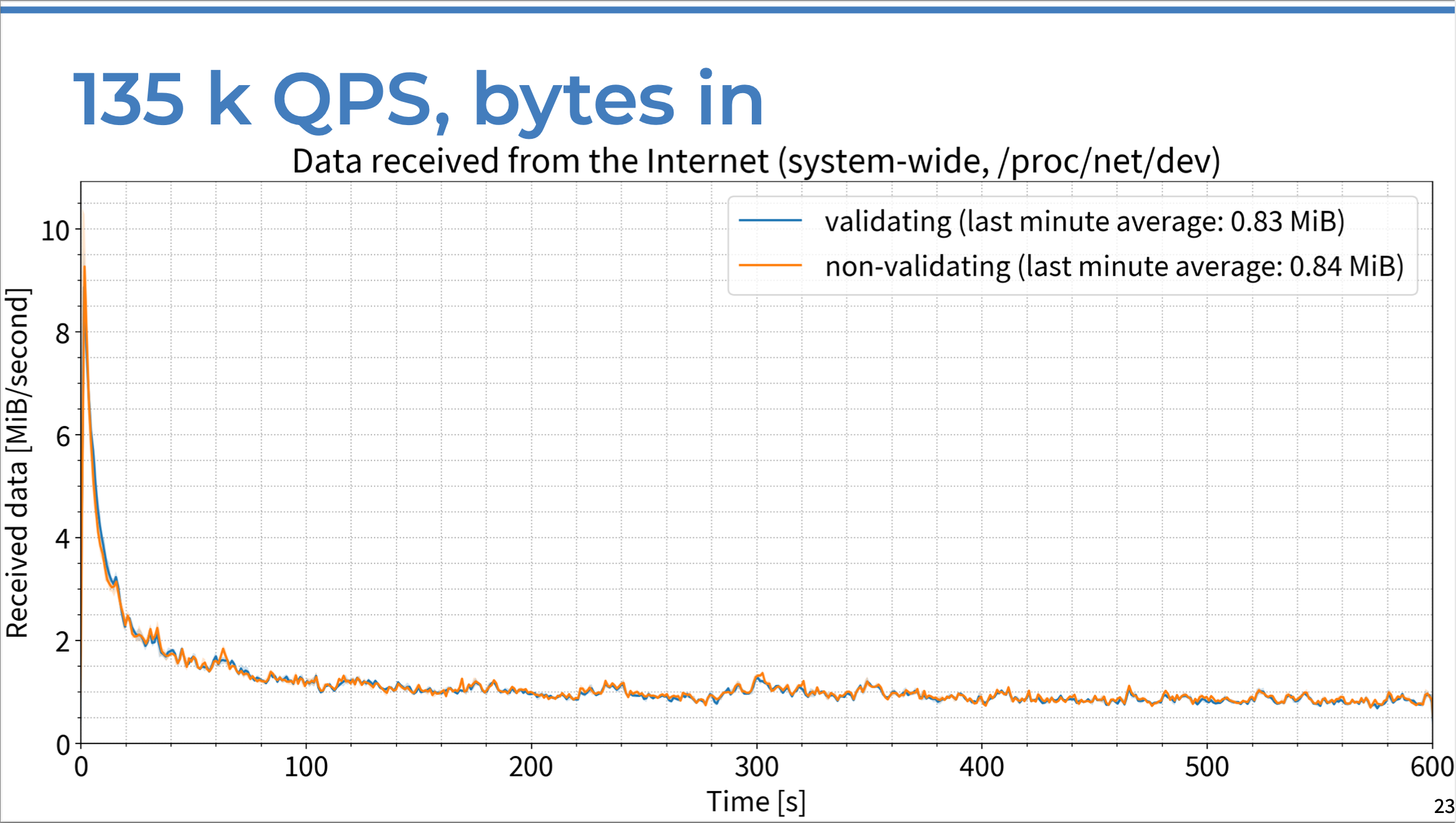

And for the number of bytes received by the server, the difference is not measurable.

Surely, the CPU must be burning now, right? Again, that is not the case. We saw a slight difference in CPU usage in the first couple of minutes of our tests, but after that the two sets of data quickly converge. By the tenth minute, we saw only a 22% increase in CPU time consumption by the validating resolver, which is less than one-quarter of one CPU core, and nothing you would notice in practice.

Once again, memory is the only area where we see any significant difference between validating and non-validating. With 135,000 QPS, memory use increased by approximately 9% for validating servers, averaged over the last minute of the test. Of course, it requires more memory to store the larger amount of data, but not a very significant amount.

DNSSEC validation has a negligible impact on server latency, bandwidth usage, CPU time consumption, and the number of OS sockets. The only area of our tests in which we saw a significant impact from DNSSEC validation was memory consumption, which increased by about 10% with validation.

For those who still find this too hard to believe, it’s worth considering that:

If you are looking for the truth and not speculation, you can also measure the impact yourself, using your own traffic capture. The measurement tools are open source! See this video. For more background on resolver performance testing and this test bed, see this earlier blog.

This article recaps the highlights of a presentation given at DNS OARC 38. The slides and a recording of the full presentation are available on the ISC presentations page.
